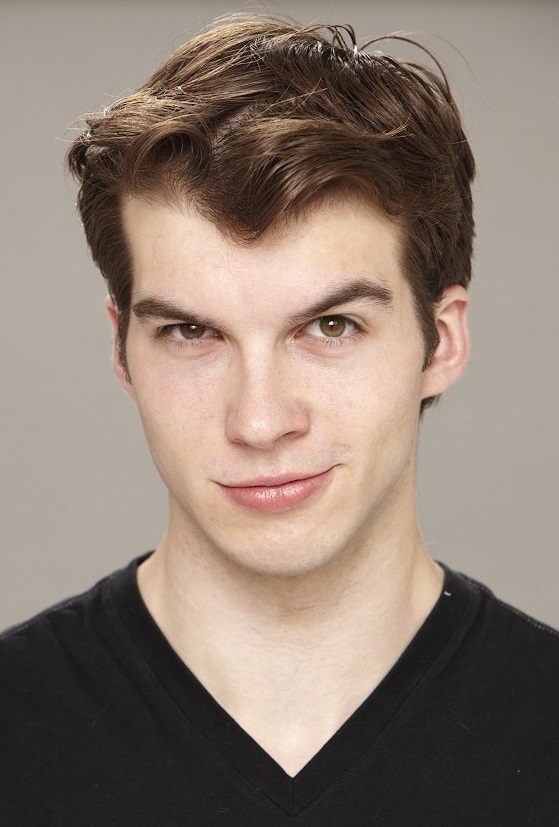

When you type "Adam Hagenbuch Wikipedia" into a search bar, you're probably looking for details about a familiar face from screens big and small: an actor's background, their roles, or perhaps some personal insights. It's a very common thing to do when someone catches your eye in a show or movie, or when you just want to learn a bit more about them.

Yet, sometimes, the internet has a funny way of showing you more than you expect. What if your search for a particular "Adam" leads you down a slightly different path, revealing another significant "Adam" that's shaping our modern world in a completely different way? It's almost like a little puzzle, isn't it?

This article explores that very possibility. While we acknowledge your initial interest in Adam Hagenbuch, we're going to take a moment to shine a light on a different "Adam": the Adam optimization algorithm, which plays a huge part in how many of the digital experiences we enjoy every day actually work. So, let's see what we discover, shall we?

Table of Contents

- Understanding the "Adam" Search

- The Adam Optimization Algorithm: A Closer Look

- Improvements and Variations: Introducing AdamW

- Beyond Algorithms: Other "Adams" in the Tech World

- Frequently Asked Questions About Optimization

Understanding the "Adam" Search

It's perfectly natural to look up people you admire or are curious about. When you type "Adam Hagenbuch Wikipedia," you're likely seeking biographical information, perhaps a list of acting credits, or details about their personal journey. People often turn to a reliable source like Wikipedia for such information, which is a really good idea for getting quick facts. However, the name "Adam" is, quite obviously, a common one, and it shows up in many different contexts across the vast expanse of the internet.

Sometimes, a search term can lead to unexpected places, especially when a name is shared. While your mind might be on a particular person, this article is about a very different kind of "Adam." This other "Adam" doesn't walk red carpets or appear on television screens. Instead, it is a set of instructions, a clever method that helps computers learn things. It's a fundamental piece of how many smart systems we use every day actually operate, which is pretty neat when you think about it.

So, for a moment, let's shift our focus from the human Adam to this technological Adam. It's a fascinating area, and understanding it can give you a fresh perspective on the digital processes happening all around us. It's like discovering a hidden engine behind the scenes.

The Adam Optimization Algorithm: A Closer Look

This "Adam" is a powerful tool in the world of machine learning, especially when it comes to training really big computer models, like those that power facial recognition or language translation. It helps these models get better at what they do, step by step, by adjusting their internal settings (usually called parameters or weights). This process of gradually reducing a model's errors is called "optimization," and it's a very important part of making artificial intelligence work.

What is This "Adam" Anyway?

The Adam method (short for "adaptive moment estimation") is a widely used way to make machine learning algorithms, particularly deep learning models, learn more effectively. It was first presented by D. P. Kingma and J. Ba in 2014, so it's been around for a little while, but it's still very much a go-to choice for many researchers and developers. What makes Adam special is that it brings together two rather clever ideas: "momentum" and "adaptive learning rates." Momentum helps the learning process keep moving in a good direction, sort of like how a ball rolling downhill gains speed. Adaptive learning rates mean that the amount by which the model adjusts each of its settings changes as it learns, rather than staying the same all the time. This makes the learning process much more flexible and efficient, sort of like fine-tuning a guitar string.

Basically, this method helps computer programs learn from huge amounts of information. Think of it like a student trying to figure out a really hard problem. Adam helps that student adjust their approach, sometimes taking big steps, sometimes small ones, depending on how close they are to the right answer. It's a bit like having a smart coach guiding the learning process, which is pretty useful.

How Adam Works: A Simpler Explanation

The Adam method operates quite differently from some older, more traditional ways of training computer models, like "stochastic gradient descent," or SGD for short. With plain SGD, there's typically one single learning rate, a number that tells the computer how much to change its internal settings at each step. Unless you add a schedule that changes it over time, this learning rate stays the same for every setting throughout training, which can be a bit rigid. It's like always taking steps of the same size, no matter if you're far from your goal or very close.
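To make the "same step size everywhere" idea concrete, here is a minimal sketch of plain SGD in Python. The toy loss f(x) = sum of x_i squared and the helper names `sgd_step` and `grad_quadratic` are made up for illustration; they are assumptions, not part of any particular library.

```python
def sgd_step(params, grads, lr=0.1):
    """Plain SGD: every parameter is moved using the same fixed learning rate."""
    return [p - lr * g for p, g in zip(params, grads)]


def grad_quadratic(params):
    # Gradient of the hypothetical toy loss f(x) = sum(x_i ** 2).
    return [2.0 * p for p in params]


params = [1.0, -2.0]
for _ in range(3):
    # The learning rate never changes, whatever the gradients look like.
    params = sgd_step(params, grad_quadratic(params), lr=0.1)
print(params)
```

On this toy loss each step simply multiplies every parameter by 0.8, because the single learning rate treats all parameters alike.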

Adam, however, is much more adaptable. Instead of applying one step size to everything, Adam works out an effective step size for each individual setting within the model. It does this by looking at the history of the "gradients," which measure how much the model's error changes when a setting is nudged. Specifically, it keeps two running averages for every setting: one of the recent gradients (the "first moment," which supplies the momentum) and one of the recent squared gradients (the "second moment," which tracks how large the gradients have been). Settings whose gradients have been consistently large take smaller steps, and settings whose gradients have been small take relatively larger ones. This makes the learning process much more dynamic and responsive. It's almost like having a personalized pace for every part of the learning journey.

This ability to adapt is one of Adam's main strengths. It means the model can often learn more quickly than if it were stuck with a single, unchanging pace. It’s a bit like driving a car that automatically adjusts its speed for different road conditions, rather than just having one speed setting.
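The per-setting adjustment described above can be sketched in a few lines of Python. This is a simplified illustration rather than a production implementation: the function names and the flat-list layout are assumptions, while the default values (0.001, 0.9, 0.999, 1e-8) are the ones suggested in the original Adam paper.

```python
import math


def init_state(n):
    """Step counter plus one running average pair (m, v) per parameter."""
    return {"t": 0, "m": [0.0] * n, "v": [0.0] * n}


def adam_step(params, grads, state, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update over a flat list of parameters (illustrative sketch)."""
    state["t"] += 1
    t = state["t"]
    new_params = []
    for i, (p, g) in enumerate(zip(params, grads)):
        # Running average of the gradient: the momentum part.
        state["m"][i] = beta1 * state["m"][i] + (1 - beta1) * g
        # Running average of the squared gradient: tracks gradient size.
        state["v"][i] = beta2 * state["v"][i] + (1 - beta2) * g * g
        # Bias correction: both averages start at zero, so they are too
        # small during the first steps.
        m_hat = state["m"][i] / (1 - beta1 ** t)
        v_hat = state["v"][i] / (1 - beta2 ** t)
        # Dividing by sqrt(v_hat) gives each parameter its own step size.
        new_params.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
    return new_params


params = [1.0, -2.0]
state = init_state(len(params))
grads = [100.0, -0.01]  # two gradients of very different size
params = adam_step(params, grads, state)
print(params)
```

Even though the two gradients differ by four orders of magnitude, both parameters move by roughly the same small amount on this first step, which is the adaptive behaviour in action.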

Adam Versus SGD: The Speed and Accuracy Story

In many experiments where people train neural networks, they often see something interesting: the "training loss" for Adam tends to go down faster than it does for SGD. Training loss is a measure of how well the model is doing on the data it's actually learning from. So, in that respect, Adam seems to get to a good point more quickly during the learning phase. This speed can be a big advantage, especially when you're working with very large datasets or complex models that take a long time to train.

However, there's a flip side to this story. While Adam might be faster at reducing the training loss, its "test accuracy" can sometimes be a bit lower than what SGD achieves. Test accuracy is how well the model performs on new data it hasn't seen before, which is the real measure of how well it has learned. Researchers have observed this gap quite often. It's like winning a race quickly but maybe not having the best form at the finish line. The gap does not always favor SGD, though: in one comparison, Adam reached nearly three points higher accuracy than SGD. Either way, the choice of method can really affect the final performance of a computer model, so picking the right one matters.

Adam tends to settle on a good solution quite quickly, while SGD with momentum might take a bit longer, but both can eventually reach very good outcomes. The difference often comes down to how they handle tricky spots in the learning landscape, such as "saddle points" and "local minima." These are flat areas or shallow dips where the learning process can slow down or stall. Adam's momentum and per-setting step sizes can help the model move through them and keep heading towards a better overall solution. So, while speed is good, getting to the very best answer is often the main goal.
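If you'd like to watch the two methods side by side, here is a small harness you can run yourself. Everything in it is an assumption chosen for illustration: the two-parameter toy loss, the starting point, and the learning rates. Which optimizer looks faster depends heavily on those choices, and a toy problem like this says nothing about test accuracy on a real network.

```python
import math


def loss(x, y):
    # Hypothetical toy loss: steep along x, shallow along y.
    return 0.5 * (100.0 * x * x + y * y)


def grad(x, y):
    return 100.0 * x, y


def run_sgd(steps, lr=0.01):
    x, y = 1.0, 1.0
    history = [loss(x, y)]
    for _ in range(steps):
        gx, gy = grad(x, y)
        x, y = x - lr * gx, y - lr * gy  # same learning rate for both
        history.append(loss(x, y))
    return history


def run_adam(steps, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    p = [1.0, 1.0]
    m = [0.0, 0.0]
    v = [0.0, 0.0]
    history = [loss(*p)]
    for t in range(1, steps + 1):
        g = grad(*p)
        for i in range(2):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            m_hat = m[i] / (1 - beta1 ** t)
            v_hat = v[i] / (1 - beta2 ** t)
            p[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
        history.append(loss(*p))
    return history


sgd_history = run_sgd(100)
adam_history = run_adam(100)
for step in (0, 10, 50, 100):
    print(step, sgd_history[step], adam_history[step])
```

Try changing the learning rates or the steepness of the loss and rerunning: the ranking between the two curves can flip, which is a good reminder that optimizer comparisons are sensitive to tuning.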

Why Adam is So Often Chosen

Adam is considered standard knowledge in the field of machine learning these days. It's so widely adopted that many people simply use it as the default starting point for their projects. Its ability to quickly reduce training errors and its general robustness make it an attractive option for many different kinds of tasks. When you're trying to get a new model up and running, or you're working with limited time, Adam's speed can be a real benefit.

Its ease of use and generally good performance across a wide range of problems mean that it's often the first method people try. It's like a reliable tool that you can almost always count on to do a pretty good job. This widespread acceptance also means there's a lot of community knowledge and support for using Adam, which makes it even more accessible for people who are just starting out or even for seasoned professionals. So, it's a very practical choice for many situations, which is quite important for real-world applications.

Improvements and Variations: Introducing AdamW

As smart as Adam is, people are always looking for ways to make things even better. This is where AdamW comes into the picture. AdamW is an improved version of the original Adam method, designed to fix a specific issue that Adam sometimes had, especially when dealing with something called "L2 regularization." L2 regularization is a technique used to help prevent computer models from becoming too specialized in the data they've seen, which can make them perform poorly on new, unseen data. It's like adding a penalty for overly complex solutions, encouraging the model to find simpler, more general answers.

The original Adam method, in some situations, could weaken the effect of this L2 regularization. The reason is that the penalty is added to the gradient, so it gets rescaled by Adam's adaptive step sizes along with everything else, and settings with large gradients end up being regularized less than intended. AdamW was created to directly address this problem. It applies the shrinking of the weights (known as "weight decay") as a separate step, outside the adaptive scaling, so it works as intended even with Adam's adaptive learning rates. So, if you're wondering how AdamW is better than Adam, it's mostly about how it handles this specific aspect of training, which makes for more stable and better-performing models in many cases. It's a subtle but important refinement, kind of like making a small adjustment to a complex machine to make it run more smoothly.
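The difference between the two approaches is easiest to see in code. The sketch below handles a single parameter and uses a made-up helper, `adam_like_step`, with a `decoupled` flag to switch between the two behaviours. Scaling the decoupled decay by the learning rate follows a common convention and is an assumption here, not something this article's sources specify.

```python
import math


def adam_like_step(p, g, m, v, t, lr=0.001, beta1=0.9, beta2=0.999,
                   eps=1e-8, weight_decay=0.01, decoupled=False):
    """One scalar update (illustrative sketch).

    decoupled=False: Adam with an L2 penalty folded into the gradient.
    decoupled=True:  AdamW-style weight decay applied separately.
    """
    if not decoupled:
        # L2 regularization: the penalty gradient joins the loss gradient,
        # so it is later divided by sqrt(v_hat) like everything else.
        g = g + weight_decay * p
    m = beta1 * m + (1 - beta1) * g
    v = beta2 * v + (1 - beta2) * g * g
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    p_new = p - lr * m_hat / (math.sqrt(v_hat) + eps)
    if decoupled:
        # Decoupled weight decay: shrink the weight directly, outside the
        # adaptive scaling.
        p_new = p_new - lr * weight_decay * p
    return p_new, m, v


# With a zero loss gradient, only the regularization moves the weight.
for wd in (0.01, 0.1):
    l2_p, _, _ = adam_like_step(1.0, 0.0, 0.0, 0.0, 1, weight_decay=wd)
    w_p, _, _ = adam_like_step(1.0, 0.0, 0.0, 0.0, 1, weight_decay=wd,
                               decoupled=True)
    print(wd, 1.0 - l2_p, 1.0 - w_p)
```

In this first-step example the L2 version shrinks the weight by about the same amount whether the penalty is 0.01 or 0.1, because the adaptive scaling normalizes it away, while the decoupled version shrinks it in proportion to the penalty you asked for.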

This ongoing refinement of these methods shows how quickly the field of machine learning moves. People are always experimenting, finding little ways to make things more effective. It's a continuous process of learning and building upon what's already there, which is pretty exciting to watch unfold.

Beyond Algorithms: Other "Adams" in the Tech World

It's interesting how the name "Adam" pops up in so many different areas, even within technology itself. While we've spent a good deal of time talking about the Adam optimization algorithm, there's also the "Adam" that appears in the context of audio equipment, specifically high-quality studio monitors. These are the speakers that sound engineers use to make sure music and sound effects are just right. Companies like JBL, Adam Audio, and Genelec are all well-known for making these kinds of speakers.

Some people might say, "Oh, if you have the money, just get Genelec!" But it's not quite that simple. Just because a speaker is called "Genelec" doesn't mean they're all the same. A Genelec 8030 is very different from a Genelec 8361, or a massive 1237. They're all "Genelec," but they serve different purposes and have vastly different capabilities. The same goes for JBL and Adam Audio. Each company makes a range of products, and each product has its own strengths and uses. It's not about one brand being universally better than another; it's about choosing the right tool for the job. So, when people talk about "JBL Adam Genelec," they're often referring to a category of professional audio tools, and "Adam" in this context is a specific brand known for its own unique sound and design. It's a completely different kind of "Adam" from the algorithm, but it's another example of how a name can be associated with quality and innovation in technology.

This just goes to show that a single name can have many meanings, depending on the context. Whether it's a person, a powerful computer method, or a piece of audio gear, the name "Adam" certainly has a presence in many corners of our modern world, which is rather fascinating, isn't it?

You can learn more from the original paper, "Adam: A Method for Stochastic Optimization" by D. P. Kingma and J. Ba, which you can find by searching academic platforms and which is a really good place to get detailed information.


Frequently Asked Questions About Optimization

People often have questions about how these computer learning methods work, especially when comparing different approaches. Here are a few common ones:

Why is Adam optimization often preferred over SGD?

Adam is frequently chosen because it generally helps computer models learn faster and often finds good solutions more quickly during the initial training phases. It does this by adapting its learning pace for different parts of the model, which can be more efficient than the single learning rate that plain SGD applies to everything. This speed is a big plus for many projects, especially when dealing with large datasets. Keep in mind that SGD can sometimes match or beat it on test accuracy, so Adam is a practical default rather than a guaranteed winner.

Can Adam's learning rate be adjusted manually?

While Adam automatically adjusts the effective step size for each parameter, you still set an overall learning rate for the Adam method itself. This value acts as a general scale for all the adaptive adjustments Adam makes. So, yes, you can definitely experiment with it to see how it affects the learning process, which is a common practice when fine-tuning models. It's like giving a general guideline for the smart adjustments Adam will make.
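Here is a quick way to see that scaling effect. The helper below, `first_adam_step_size`, is a hypothetical function written for this illustration; it computes the size of Adam's very first update for one parameter, using the default beta and epsilon values from the original paper.

```python
import math


def first_adam_step_size(grad, lr, beta1=0.9, beta2=0.999, eps=1e-8):
    """Size of Adam's first update for a single parameter (sketch)."""
    m = (1 - beta1) * grad
    v = (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1)  # bias correction at step t = 1
    v_hat = v / (1 - beta2)
    return abs(lr * m_hat / (math.sqrt(v_hat) + eps))


for lr in (0.1, 0.01, 0.001):
    print(lr, first_adam_step_size(grad=5.0, lr=lr))
```

On the first step the gradient's size cancels out almost entirely, so the update is very close to the learning rate you chose. Later steps depend on the gradient history as well, but the learning rate keeps acting as the overall scale.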

What problem does AdamW solve compared to Adam?

AdamW addresses a specific issue where the original Adam method could sometimes reduce the effectiveness of L2 regularization, a technique used to help models generalize better to new information. AdamW fixes this by decoupling the weight decay from the adaptive, gradient-based update and applying it to the weights directly, making sure that the regularization works as intended. This leads to models that are often more robust and perform better on unseen data, which is a pretty important improvement for many real-world applications.

Detail Author:

- Name : Cale Powlowski
